Synnefo Administrator’s Guide¶

This is the complete Synnefo Administrator’s Guide.

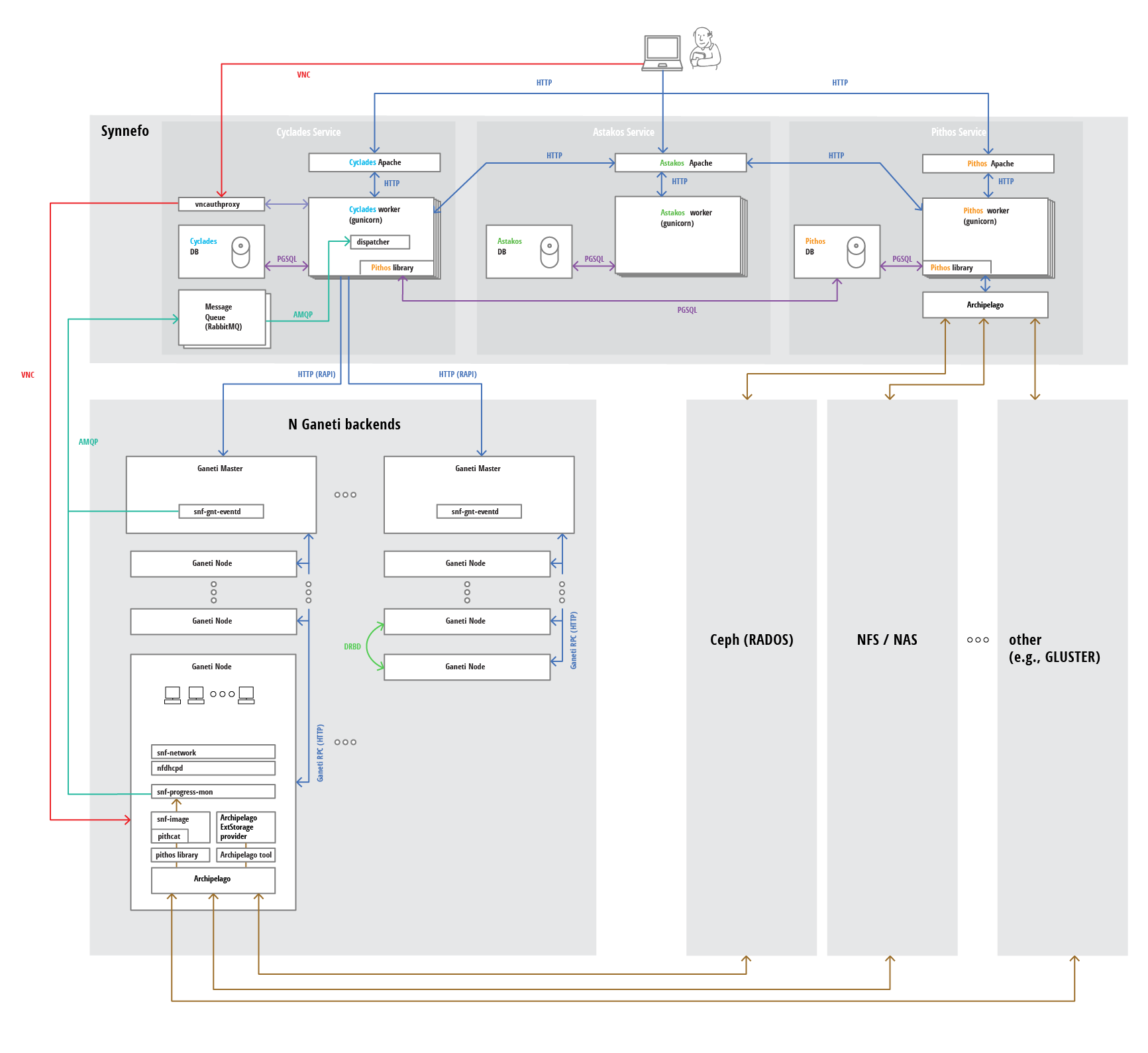

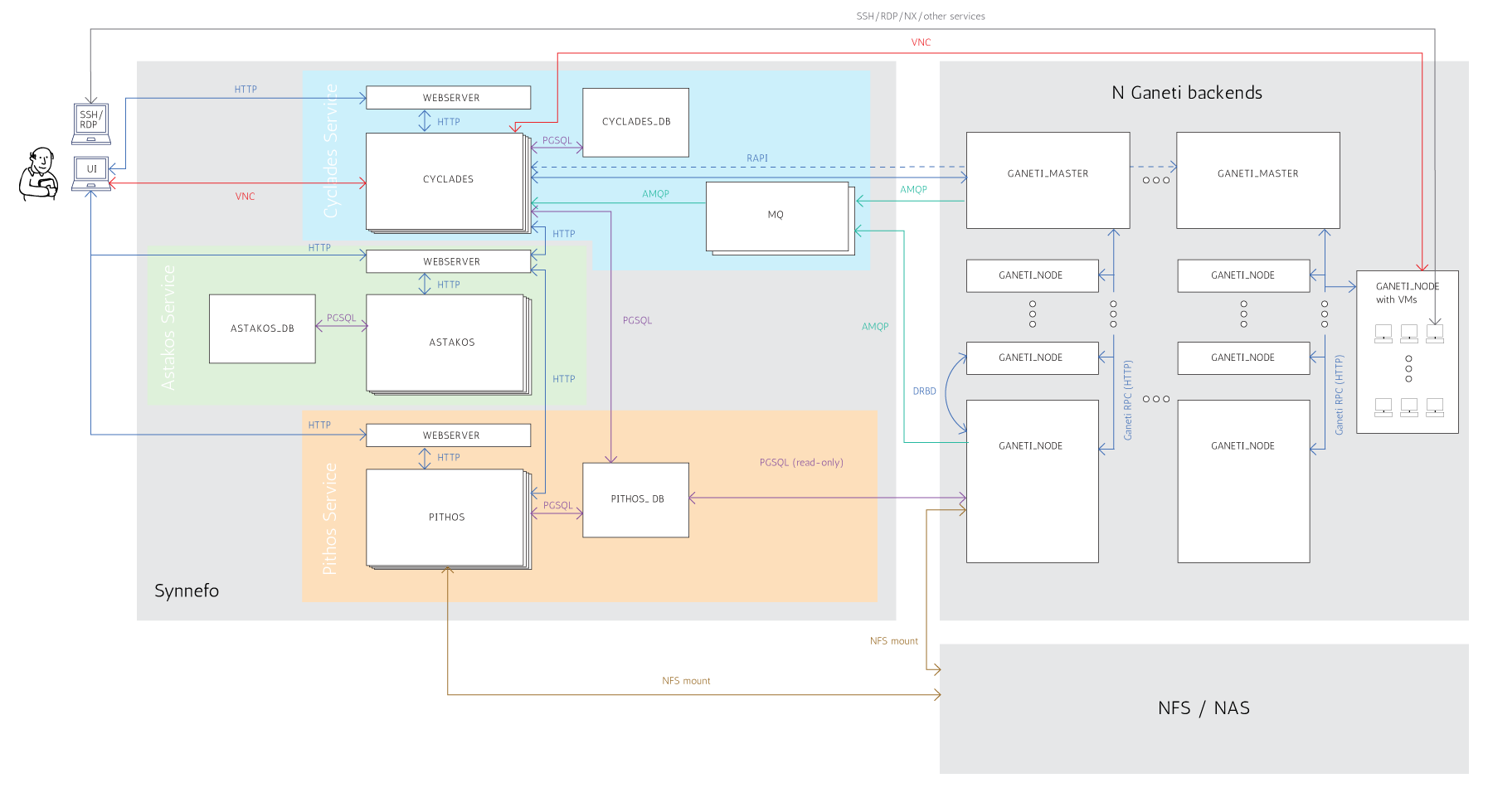

General Synnefo Architecture¶

The following figure shows a detailed view of the whole Synnefo architecture and how it interacts with multiple Ganeti clusters. We hope that after reading the Administrator’s Guide you will be able to understand every component and all the interactions between them.

Required system users and groups (synnefo, archipelago)¶

Since v0.16, Synnefo requires an Archipelago installation for the Pithos backend. Archipelago, in turn, supports both NFS and RADOS as storage backends. As a result, several components need specific access rights.

Synnefo ships its own configuration files under /etc/synnefo. To protect these files from being compromised, they are owned by root:synnefo with group read access (mode 640). Since Gunicorn, which serves Synnefo by default, needs read access to the configuration files and we don’t want it to run as root, it must run with group synnefo.
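
The ownership and mode described above can be spot-checked with a short script. This is a minimal sketch and not part of Synnefo: the helper names is_expected and check_conf_file are hypothetical, and the script only assumes the root:synnefo, mode 640 layout stated above.

```python
import grp
import os
import pwd
import stat


def is_expected(owner, group, mode):
    """Return True if a file matches the root:synnefo, mode 640 layout."""
    return owner == "root" and group == "synnefo" and mode == 0o640


def check_conf_file(path):
    """Stat a file and compare it against the expected layout (hypothetical helper)."""
    st = os.stat(path)
    owner = pwd.getpwuid(st.st_uid).pw_name
    group = grp.getgrgid(st.st_gid).gr_name
    return is_expected(owner, group, stat.S_IMODE(st.st_mode))


if __name__ == "__main__":
    conf_dir = "/etc/synnefo"
    # Only inspect the directory if it exists on this machine.
    if os.path.isdir(conf_dir):
        for name in sorted(os.listdir(conf_dir)):
            path = os.path.join(conf_dir, name)
            if os.path.isfile(path):
                print(path, "ok" if check_conf_file(path) else "UNEXPECTED")
```

Run as root on a Synnefo node, the script prints one line per configuration file; any file reported as UNEXPECTED deserves a closer look.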

Warning

If you want to add your own configuration file, do not forget to declare the appropriate encoding by adding the line ## -*- coding: utf-8 -*- at the beginning of the file.

Cyclades and Pithos talk to Archipelago over named pipes under /dev/shm/posixfd. This directory is created by Archipelago, is owned by the user/group that Archipelago runs as, and must also be accessible by Gunicorn. Therefore we let Gunicorn run as the synnefo user and Archipelago as archipelago:synnefo (by default it runs as archipelago:archipelago). Note that the synnefo user and group are created by the snf-common package.

Archipelago must have a storage backend to physically store blocks, maps and locks. This can be either an NFS or a RADOS cluster.

NFS backing store¶

In the case of NFS, Archipelago must have write permission on the exported directories. We choose to have /srv/archip exported with blocks, maps, and locks subdirectories. They are owned by archipelago:synnefo and have g+ws access permissions, so Archipelago is able to read and write in these directories. We could have the whole NFS isolated from Synnefo (owned by archipelago:archipelago with 640 access permissions), but we choose not to (e.g. some future extension could require access to the backing store directly from Synnefo).

Due to NFS restrictions, all Archipelago nodes must have a common uid for the archipelago user and a common gid for the synnefo group. So, before any Synnefo installation, we create them in advance. We assume that ids 200 and 300 are available across all nodes.

# addgroup --system --gid 200 synnefo

# adduser --system --uid 200 --gid 200 --no-create-home \

--gecos Synnefo synnefo

# addgroup --system --gid 300 archipelago

# adduser --system --uid 300 --gid 300 --no-create-home \

--gecos Archipelago archipelago

Normally the snf-common and archipelago packages are responsible

for creating the required system users and groups.
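
To verify that the ids really are common across nodes, one could collect the pair (archipelago uid, synnefo gid) from every node and compare it against the expected values. The sketch below is an illustration under stated assumptions: the function names are hypothetical, the node names are made up, and the expected pair (300, 200) follows from the adduser/addgroup commands above.

```python
import grp
import pwd


def local_ids(user="archipelago", group="synnefo"):
    """Return (uid of user, gid of group) on this node, or None if either is missing."""
    try:
        return pwd.getpwnam(user).pw_uid, grp.getgrnam(group).gr_gid
    except KeyError:
        return None


def mismatched_nodes(ids_by_node, expected=(300, 200)):
    """Return the sorted names of nodes whose (uid, gid) pair differs from expected.

    ids_by_node maps a node name to the value local_ids() returned on that node.
    """
    return sorted(node for node, ids in ids_by_node.items() if ids != expected)


if __name__ == "__main__":
    # Hypothetical data, as if local_ids() had been collected from three nodes.
    collected = {"node1": (300, 200), "node2": (300, 201), "node3": None}
    print(mismatched_nodes(collected))
```

How the values are gathered from each node (SSH, configuration management, and so on) is left open here on purpose.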

Identity Service (Astakos)¶

Authentication methods¶

Astakos supports multiple authentication methods:

- local username/password

- LDAP / Active Directory

- SAML 2.0 (Shibboleth) federated logins

Shibboleth Authentication¶

Astakos can delegate user authentication to a Shibboleth federation.

To set up Shibboleth, install the package:

apt-get install libapache2-mod-shib2

Adjust the configuration files in /etc/shibboleth as appropriate.

Add in /etc/apache2/sites-available/synnefo-ssl:

ShibConfig /etc/shibboleth/shibboleth2.xml

Alias /shibboleth-sp /usr/share/shibboleth

<Location /ui/login/shibboleth>

AuthType shibboleth

ShibRequireSession On

ShibUseHeaders On

require valid-user

</Location>

and before the line containing:

ProxyPass / http://localhost:8080/ retry=0

add:

ProxyPass /Shibboleth.sso !

Then, enable the shibboleth module:

a2enmod shib2

After passing through the apache module, the following tokens should be available at the destination:

eppn # eduPersonPrincipalName

Shib-InetOrgPerson-givenName

Shib-Person-surname

Shib-Person-commonName

Shib-InetOrgPerson-displayName

Shib-EP-Affiliation

Shib-Session-ID

Astakos keeps a map of Shibboleth users keyed by the value of the REMOTE_USER header, which is passed by the mod_shib2 module. This lets Astakos identify the account a Shibboleth user is associated with every time the user logs in from an affiliated Shibboleth IdP.

The Shibboleth attribute that gets mapped to the REMOTE_USER header can be changed in the /etc/shibboleth/shibboleth2.xml configuration file.

<!-- The ApplicationDefaults element is where most of Shibboleth's SAML bits are defined. -->

<ApplicationDefaults entityID="https://sp.example.org/shibboleth"

REMOTE_USER="eppn persistent-id targeted-id">

Warning

Changing mod_shib2 REMOTE_USER to map to different Shibboleth attributes will probably invalidate any existing Shibboleth-enabled users in the Astakos database. Those users won’t be able to log in to their existing accounts.

Finally, add ‘shibboleth’ to the ASTAKOS_IM_MODULES list. The variable resides in the file /etc/synnefo/20-snf-astakos-app-settings.conf.

Twitter Authentication¶

To enable Twitter authentication, sign in to a Twitter account and visit dev.twitter.com/apps.

Click Create an application.

Fill in the necessary information and give the following callback URL:

https://node1.example.com/astakos/ui/login/twitter/authenticated

Edit /etc/synnefo/20-snf-astakos-app-settings.conf and set the

corresponding variables ASTAKOS_TWITTER_TOKEN and

ASTAKOS_TWITTER_SECRET to reflect your newly created pair.

Finally, add ‘twitter’ in ASTAKOS_IM_MODULES list.

Google Authentication¶

To enable Google authentication, sign in to a Google account and visit https://code.google.com/apis/console/.

Under API Access select Create another client ID, select Web application, expand more options in Your site or hostname section and in Authorized Redirect URIs add:

https://node1.example.com/astakos/ui/login/google/authenticated

Edit /etc/synnefo/20-snf-astakos-app-settings.conf and set the

corresponding variables ASTAKOS_GOOGLE_CLIENT_ID and

ASTAKOS_GOOGLE_SECRET to reflect your newly created pair.

Finally, add ‘google’ in ASTAKOS_IM_MODULES list.

Working with Astakos¶

User registration¶

When a new user signs up, he/she is not directly marked as active. You can see his/her state by running (on the machine that runs the Astakos app):

$ snf-manage user-list

More detailed user status is provided in the status field of the user-show command:

$ snf-manage user-show <user-id>

id : 6

uuid : 78661411-5eed-412f-a9ea-2de24f542c2e

status : Accepted/Active (accepted policy: manual)

email : user@synnefo.org

....

Based on the astakos-app configuration, there are several ways for a user to get verified and activated in order to be able to login. We discuss the user verification and activation flow in the following section.

User activation flow¶

A user can register for an account using the Astakos signup form. Once the form is submitted successfully, a user entry is created in the Astakos database. That entry is passed through the Astakos activation backend, which decides whether the user should be automatically verified and activated.

Email verification¶

The verification process ensures that the user owns the email address provided during signup. By default, after each successful signup Astakos sends the user a verification URL via email.

At this stage:

- subsequent registrations invalidate and delete the previous registrations of the same email address.

- in case the user misses the initial notification, additional emails can be sent either via the URL which is shown to the user if he/she tries to log in, or by the administrator using the snf-manage user-activation-send <userid> command.

- the administrator may also force a user to get verified using the snf-manage user-modify --verify <userid> command.

Account activation¶

Once the user gets verified, Astakos decides whether or not to proceed with user activation. If the ASTAKOS_MODERATION_ENABLED setting is set to False (the default value), the user gets activated automatically.

If moderation is enabled, Astakos may still automatically activate the user in the following cases:

- User email matches any of the regular expressions defined in ASTAKOS_RE_USER_EMAIL_PATTERNS (defaults to [])

- User used a signup method (e.g. shibboleth) for which automatic activation is enabled (see authentication methods policies).
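
The decision described above can be summarised in a few lines. This is a minimal sketch of the documented rules, not the Astakos activation backend itself: the function name and its arguments are hypothetical, and the use of re.match for pattern matching is an assumption.

```python
import re


def is_auto_activated(email, provider, moderation_enabled,
                      email_patterns=(), automoderate_providers=()):
    """Return True if a verified user would be activated without moderation.

    email_patterns stands in for ASTAKOS_RE_USER_EMAIL_PATTERNS and
    automoderate_providers for the providers whose AUTOMODERATE policy is True.
    """
    if not moderation_enabled:
        # Moderation disabled: every verified user is activated.
        return True
    if any(re.match(pattern, email) for pattern in email_patterns):
        # The email matches one of the whitelisted patterns.
        return True
    # Otherwise only providers with automatic activation qualify.
    return provider in automoderate_providers


if __name__ == "__main__":
    print(is_auto_activated("user@synnefo.org", "local", moderation_enabled=True))
```

A user for whom this returns False stays pending until an administrator accepts or rejects the account.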

If none of the above triggers automatic activation, an email is sent to the persons listed in the ACCOUNT_NOTIFICATIONS_RECIPIENTS setting, notifying them that a new user is pending moderation and that it is up to the administrator to decide whether the user should be activated. The UI also shows a corresponding ‘pending moderation’ message to the user. The administrator can activate a user using the snf-manage user-modify command:

# command to activate a pending user

$ snf-manage user-modify --accept <userid>

# command to reject a pending user

$ snf-manage user-modify --reject --reject-reason="spammer" <userid>

Once the activation process finishes, a greeting message is sent to the user’s email address and a notification of the activation is sent to the persons listed in the ACCOUNT_NOTIFICATIONS_RECIPIENTS setting. Once activated, the user is able to log in and access the Synnefo services.

Additional authentication methods¶

Astakos supports third-party logins from external identity providers. This can be useful since it allows users to use their existing credentials to log in to the Astakos service.

Currently astakos supports the following identity providers:

- Shibboleth (module name shibboleth)

- Google (module name google)

- Twitter (module name twitter)

- LinkedIn

To enable any of the above modules (by default only local accounts are allowed) you have to install the oauth2 package. To do so, run:

apt-get install python-oauth2

Then retrieve and set the required provider settings and append the module name to ASTAKOS_IM_MODULES.

# settings from https://code.google.com/apis/console/

ASTAKOS_GOOGLE_CLIENT_ID = '1111111111-epi60tvimgha63qqnjo40cljkojcann3.apps.googleusercontent.com'

ASTAKOS_GOOGLE_SECRET = 'tNDQqTDKlTf7_LaeUcWTWwZM'

# let users signup and login using their google account

ASTAKOS_IM_MODULES = ['local', 'google']

Authentication method policies¶

Astakos allows you to override the default policies for each enabled provider separately by adding the appropriate settings in your .conf files in the following format:

ASTAKOS_AUTH_PROVIDER_<module>_<policy>_POLICY

Available policies are:

- CREATE Users can signup using that provider (default: True)

- REMOVE/ADD Users can remove/add login method from their profile (default: True)

- AUTOMODERATE Automatically activate users that signup using that provider (default: False)

- LOGIN Whether or not users can use the provider to login (default: True).

For example, to enable automatic activation for your academic users while keeping locally signed-up users under moderation, you can apply the following settings.

ASTAKOS_AUTH_PROVIDER_SHIBBOLETH_AUTOMODERATE_POLICY = True

ASTAKOS_AUTH_PROVIDER_SHIBBOLETH_REMOVE_POLICY = False
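
To avoid typos when writing such settings by hand, the setting name can be derived from the format given above. The helper below is a hypothetical convenience and not part of Astakos; it only applies the ASTAKOS_AUTH_PROVIDER_<module>_<policy>_POLICY pattern.

```python
def policy_setting_name(module, policy):
    """Build the setting name for a provider policy, following the documented format."""
    return "ASTAKOS_AUTH_PROVIDER_{}_{}_POLICY".format(module.upper(), policy.upper())


if __name__ == "__main__":
    # Reproduces the two setting names used in the example above.
    for policy, value in (("automoderate", True), ("remove", False)):
        print("{} = {}".format(policy_setting_name("shibboleth", policy), value))
```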

User login¶

During the login procedure, the user is authenticated by the respective identity provider.

If ASTAKOS_RECAPTCHA_ENABLED is set and the user fails several times (ASTAKOS_RATELIMIT_RETRIES_ALLOWED setting) to provide the correct credentials for a local account, he/she is then prompted to solve a captcha challenge.

Upon success, the system renews the token (if it has expired), logs the user in and sets the cookie, before redirecting the user to the value of the next parameter.

Projects and quota¶

Synnefo supports granting resources and controlling their quota through the mechanism of projects. A project is considered a pool of finite resources. Every actual resource allocated by a user (e.g. a Cyclades VM or a Pithos container) is also assigned to a project of which the user is a member. For each resource, a project specifies the maximum amount that can be assigned to it in total and the maximum amount that a single member can assign to it.
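
The two limits a project sets for a resource can be illustrated with a small check. This is a simplified sketch, not the Astakos quota implementation: the function name and arguments are hypothetical, and usage and limits are plain numbers for a single resource.

```python
def can_assign(amount, project_usage, project_limit, member_usage, member_limit):
    """Return True if a member may assign `amount` more of a resource to a project.

    Both the project-wide limit and the per-member limit must hold.
    """
    within_project = project_usage + amount <= project_limit
    within_member = member_usage + amount <= member_limit
    return within_project and within_member


if __name__ == "__main__":
    # A project of 10 units with at most 4 units per member, 8 units already in use.
    print(can_assign(2, project_usage=8, project_limit=10, member_usage=0, member_limit=4))
```

A limit of float("inf") behaves as an unlimited resource in this sketch.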

Default quota¶

Upon user creation, a special purpose user-specific project is automatically created in order to hold the quota provided by the system. These system projects are identified with the same UUID as the user.

To inspect the quota that future users will receive by default through their

base projects, check column system_default in:

# snf-manage resource-list

You can modify the default system quota limit for all future users with:

# snf-manage resource-modify <resource_name> --system-default <value>

You can also control the default quota a new project offers to its members if a limit is not specified in the project application (project default). In particular, if a resource is not meant to be visible to the end user, then it’s best to set its project default to infinite.

# snf-manage resource-modify cyclades.total_ram --project-default inf

Grant extra quota through projects¶

A user can apply for a new project through the web interface or the API. Once it is approved by the administrators, the applicant can join the project and let other users in too.

A project member can make use of the quota granted by the project by specifying this particular project when creating a new quotable entity.

Note that quota are not accumulative: in order to allocate a 100GB disk, one must be in a project that grants at least 100GB; it is not possible to add up quota from different projects. Note also that if allocating an entity requires multiple resources (e.g. cpu and ram for a Cyclades VM) these must be all assigned to a single project.
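
The non-accumulative rule means that a request is matched against each project on its own. The following sketch illustrates this under stated assumptions: the function name, the project names and the resource names (disk, cpu, ram) are all illustrative, and the available amounts are given directly rather than computed from limits and usage.

```python
def projects_that_fit(request, available_by_project):
    """Return the sorted names of projects that can cover the whole request alone.

    request maps resource names to required amounts; available_by_project maps
    a project name to the amounts it can still grant to the user.
    """
    return sorted(
        project
        for project, available in available_by_project.items()
        if all(available.get(resource, 0) >= amount
               for resource, amount in request.items())
    )


if __name__ == "__main__":
    # Two projects granting 60GB each cannot be combined for a 100GB disk.
    print(projects_that_fit({"disk": 100}, {"p1": {"disk": 60}, "p2": {"disk": 60}}))
```

An empty result means the allocation is refused, even if the sum over all projects would have been enough.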

Reclaiming resources¶

When a project is deactivated or a user is removed from a project, the quota that have been granted to the user are revoked. If the user still owns resources assigned to the project, the user quota appear overlimit on that project. The services are responsible for inspecting the overquota state of users and reclaiming their resources. For instance, Cyclades provides the management command enforce-resources-cyclades to reclaim VMs, volumes, and floating IPs.

When a user is deactivated, their system project, owned projects and project memberships are suspended. Subsequently, the user’s resources can be reclaimed as explained above.

Control projects¶

To list pending project applications in astakos:

# snf-manage project-list --pending

Note the last column, the application id. To approve it:

# <app id> from the last column of project-list

# snf-manage project-control --approve <app id>

To deny an application:

# snf-manage project-control --deny <app id>

Before taking an action, one can inspect project status, settings and quota limits with:

# snf-manage project-show <project-uuid>

For an initialized project, option --quota also reports the resource

usage.

Users designated as project admins can approve or deny

an application through the web interface. In

20-snf-astakos-app-settings.conf set:

# UUIDs of users that can approve or deny project applications from the web.

ASTAKOS_PROJECT_ADMINS = [<uuid>, ...]

Set quota limits¶

One can change the quota limits of an initialized project with:

# snf-manage project-modify <project-uuid> --limit <resource_name> <member_limit> <project_limit>

One can set system quota for all accepted users (that is, set limits for system projects), with possible exceptions, with:

# snf-manage project-modify --all-system-projects --exclude <uuid1>,<uuid2> --limit ...

Quota are reported per resource, for all projects in which the user is a member, with:

# snf-manage user-show <user-uuid> --quota

With option --projects, owned projects and memberships are also reported.

Astakos advanced operations¶

Adding “Terms of Use”¶

Astakos supports versioned terms-of-use. First of all you need to create an

html file that will contain your terms. For example, create the file

/usr/share/synnefo/sample-terms.html, which contains the following:

<h1>My cloud service terms</h1>

These are the example terms for my cloud service

Then, add those terms-of-use with the snf-manage command:

$ snf-manage term-add /usr/share/synnefo/sample-terms.html

Your terms have been successfully added and you will see the corresponding link appearing in the Astakos web pages’ footer.

During the account registration, if there are approval terms, the user is presented with an “I agree with the Terms” checkbox that needs to get checked in order to proceed.

In case there are new approval terms that the user has not signed yet, the signed_terms_required view decorator redirects to the approval_terms view, so the user will be presented with the new terms the next time he/she logs in.

Enabling reCAPTCHA¶

Astakos supports the reCAPTCHA feature.

If enabled, it protects the Astakos forms from bots. To enable the feature, go

to https://www.google.com/recaptcha/admin/create and create your own reCAPTCHA

key pair. Then edit /etc/synnefo/20-snf-astakos-app-settings.conf and set

the corresponding variables to reflect your newly created key pair. Finally, set

the ASTAKOS_RECAPTCHA_ENABLED variable to True:

ASTAKOS_RECAPTCHA_PUBLIC_KEY = 'example_recaptcha_public_key!@#$%^&*('

ASTAKOS_RECAPTCHA_PRIVATE_KEY = 'example_recaptcha_private_key!@#$%^&*('

ASTAKOS_RECAPTCHA_ENABLED = True

Restart the service on the Astakos node(s) and you are ready:

# /etc/init.d/gunicorn restart

Check out your new Sign up page. If you see the reCAPTCHA box, you have set up everything correctly.

Astakos internals¶

X-Auth-Token¶

Alice requests a specific resource from a cloud service, e.g. Pithos. In the request she supplies the X-Auth-Token so that the service can determine whether she is eligible to perform the specific task. The service contacts Astakos through its /account/v1.0/authenticate API call, providing the specific X-Auth-Token. Astakos checks whether the token belongs to an active user and has not expired, and returns a dictionary containing user-related information. Finally, the service uses the uniq field included in the dictionary as the account string to identify the resources accessible to the user.
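
From the point of view of a service, the exchange above could look roughly as follows. This is a sketch under several assumptions: the Astakos host name is hypothetical, the token is assumed to be sent as an X-Auth-Token request header, and the returned dictionary is assumed to be JSON-encoded. Only the /account/v1.0/authenticate path and the uniq field come from the description above.

```python
import json
import urllib.request

ASTAKOS_BASE = "https://astakos.example.com"  # hypothetical host


def build_authenticate_request(token, base=ASTAKOS_BASE):
    """Build the request a service would send to Astakos for a given token."""
    url = base.rstrip("/") + "/account/v1.0/authenticate"
    return urllib.request.Request(url, headers={"X-Auth-Token": token})


def account_from_response(body):
    """Extract the account string (the uniq field) from a JSON response body."""
    return json.loads(body)["uniq"]


def authenticate(token, opener=urllib.request.urlopen):
    """Ask Astakos about a token and return the account string it maps to."""
    with opener(build_authenticate_request(token)) as response:
        return account_from_response(response.read().decode("utf-8"))
```

The opener argument exists only so that the network call can be replaced in tests; a real service would also need to handle error responses for invalid or expired tokens.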

Django Auth methods and Backends¶

Astakos incorporates the Django user authentication system and extends its User model.

Since the username field of the Django User model is limited to 30 characters, AstakosUser is uniquely identified by the email instead. Therefore, astakos.im.authentication_backends.EmailBackend is used to authenticate a user by email if the first argument is actually an email; otherwise it tries the username.

A new AstakosUser instance is assigned a uuid as username and an auth_token used by the cloud services to authenticate the user. astakos.im.authentication_backends.TokenBackend is also specified in order to authenticate the user using the email and the token fields.

Logged on users can perform a number of actions:

- access and edit their profile via: /im/profile.

- change their password via: /im/password

- send feedback for grnet services via: /im/send_feedback

- logout (and delete cookie) via: /im/logout

Internal Astakos requests are handled using cookie-based Django user sessions.

External systems should forward to the /login URI. The server,

depending on its configuration will redirect to the appropriate login page.

When done with logging in, the service’s login URI should redirect to the URI

provided with next, adding user and token parameters, which contain the email

and token fields respectively.

The login URI accepts the following parameters:

| Request Parameter Name | Value |

|---|---|

| next | The URI to redirect to when the process is finished |

| renew | Force token renewal (no value parameter) |

| force | Force logout current user (no value parameter) |
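
An external system could assemble the login redirect from the parameters in the table as sketched below. The base URL and the helper name are hypothetical; the sketch only assumes that next carries a URL-encoded value while renew and force are appended without a value, as the table states.

```python
from urllib.parse import urlencode


def login_url(base, next_uri, renew=False, force=False):
    """Build a /login URL with the next, renew and force parameters."""
    query = urlencode({"next": next_uri})
    # renew and force are flags without a value.
    for flag, enabled in (("renew", renew), ("force", force)):
        if enabled:
            query += "&" + flag
    return base.rstrip("/") + "/login?" + query


if __name__ == "__main__":
    print(login_url("https://accounts.example.com",
                    "https://pithos.example.com/ui/", renew=True))
```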

External systems inside the ASTAKOS_COOKIE_DOMAIN scope can acquire the

user information by the cookie identified by ASTAKOS_COOKIE_NAME setting

(set during the login procedure).

Finally, backend systems that have acquired a token can use the authenticate API call from a private network or through HTTPS.

File/Object Storage Service (Pithos+)¶

Pithos+ is the Synnefo component that implements a storage service and exposes the associated OpenStack REST APIs with custom extensions.

Pithos+ advanced operations¶

Enable separate domain for serving user content¶

Since Synnefo v0.15, it is possible to serve untrusted user content in an isolated domain.

Enabling this feature consists of the following steps:

Declare new domain in apache server

In order to enable the apache server to serve several domains, it is required to set up several virtual hosts. Therefore, to add the new domain, e.g. “user-content.example.com”, append the following in /etc/apache2/sites-available/synnefo-ssl:

<VirtualHost _default_:443>

ServerName user-content.example.com

Alias /static "/usr/share/synnefo/static"

# SetEnv no-gzip

# SetEnv dont-vary

AllowEncodedSlashes On

RequestHeader set X-Forwarded-Proto "https"

<Proxy * >

Order allow,deny

Allow from all

</Proxy>

SetEnv proxy-sendchunked

SSLProxyEngine off

ProxyErrorOverride off

ProxyPass /static !

ProxyPass / http://localhost:8080/ retry=0

ProxyPassReverse / http://localhost:8080/

RewriteEngine On

RewriteCond %{THE_REQUEST} ^.*(\\r|\\n|%0A|%0D).* [NC]

RewriteRule ^(.*)$ - [F,L]

SSLEngine on

SSLCertificateFile /etc/ssl/certs/ssl-cert-snakeoil.pem

SSLCertificateKeyFile /etc/ssl/private/ssl-cert-snakeoil.key

</VirtualHost>

Note

Also consider purchasing and installing a certificate for the new domain.

Finally, restart the apache server:

pithos-host$ /etc/init.d/apache2 restart

Register Pithos+ as an OAuth2 client in Astakos

Starting from Synnefo version 0.15, in order to view the content of a protected resource, Pithos+ (on behalf of the user) has to be granted authorization for the specific resource by Astakos.

During the authorization grant procedure, Pithos+ has to authenticate itself with Astakos since the latter has to prevent serving requests by unknown/unauthorized clients.

Therefore, in the installation guide you were guided to register Pithos+ as an OAuth2 client in Astakos.

Note

You can see the registered clients by running:

astakos-host$ snf-manage oauth2-client-list -o identifier,redirect_urls,is_trusted

However, requests originating from the new domain will be rejected, since Astakos does not know about the new domain.

Therefore, you need to register a new client pointing to the unsafe domain. To do so, use the following command:

astakos-host$ snf-manage oauth2-client-add pithos-unsafe-domain --secret=<secret> --is-trusted --url https://user-content.example.com/pithos/ui/view

Note

You can also unregister the client pointing to the safe domain, since it will no longer be useful. To do so, run the following:

astakos-host$ snf-manage oauth2-client-remove pithos-view

Update Pithos+ configuration

Respectively, the PITHOS_OAUTH2_CLIENT_CREDENTIALS setting should be updated to contain the credentials of the client registered in the previous step.

Furthermore, you need to restrict all the requests for user content to be served exclusively by the unsafe domain.

To enable this, set the PITHOS_UNSAFE_DOMAIN setting to the value of the new domain e.g. “user-content.example.com”

Finally, restart the gunicorn server:

pithos-host$ /etc/init.d/gunicorn restart

Pithos storage backend¶

Starting from Synnefo version 0.16, we introduce Archipelago as the new storage backend. Archipelago will act as a storage abstraction layer between Pithos and NFS, RADOS or any other storage backend driver that Archipelago supports. For more information about backend drivers please check Archipelago documentation.

Since this version care must be taken when restarting Archipelago on a Pithos worker node. Pithos acts as an Archipelago peer and must be stopped first before trying to restart Archipelago for any reason.

If you need to restart Archipelago on a running Pithos worker follow the procedure below:

pithos-host$ /etc/init.d/gunicorn stop

pithos-host$ /etc/init.d/archipelago restart

pithos-host$ /etc/init.d/gunicorn start

Compute/Network/Image Service (Cyclades)¶

Introduction¶

Cyclades is the Synnefo component that implements Compute, Network and Image services and exposes the associated OpenStack REST APIs. By running Cyclades you can provide a cloud that can handle thousands of virtual servers and networks.

Cyclades does not include any virtualization software and knows nothing about the low-level VM management operations, e.g. the handling of VM creation or migrations among physical nodes. Instead, Cyclades is the component that handles multiple Ganeti backends and exposes the REST APIs. The administrator can expand the infrastructure dynamically either by adding more Ganeti nodes or by adding new Ganeti clusters. Cyclades issues VM control commands to Ganeti via Ganeti’s remote API and receives asynchronous notifications from Ganeti backends whenever the state of a VM changes, due to Synnefo- or administrator-initiated commands.

Cyclades is the action orchestrator and the API layer on top of multiple Ganeti clusters. With this decoupled design, Ganeti clusters are self-contained and the administrator has complete control over them without Cyclades knowing about it. For example, a VM migration to a different physical node is transparent to Cyclades.

Working with Cyclades¶

Flavors and Volume Types¶

When creating a VM, the user must specify the flavor of the virtual server. Flavors are the virtual hardware templates, and provide a description about the number of CPUs, the amount of RAM, and the size of the disk of the VM. Besides the size of the disk, Cyclades flavors describe the storage backend that will be used for the virtual server.

Flavors are created by the administrator and the user can select one of the available flavors. After VM creation, the user can resize his VM, by adding/removing CPU and RAM.

Cyclades supports different storage backends that are described by the volume type of the flavor. Each volume type contains a disk template attribute which is mapped to Ganeti’s instance disk template. Currently the available disk templates are the following:

- file: regular files

- sharedfile: regular files on a shared directory, e.g. NFS

- plain: logical volumes

- drbd: drbd on top of lvm volumes

- rbd: rbd volumes residing inside a RADOS cluster

- ext: disks provided by an external shared storage.

- ext_archipelago: External shared storage provided by Archipelago.

Volume types are created by the administrator using the snf-manage volume-type-create command and providing the disk template and a human-friendly name:

$ snf-manage volume-type-create --disk-template=drbd --name=DRBD

Flavors are created by the administrator using the snf-manage flavor-create command. The command takes as arguments the number of CPUs, the amount of RAM, the size of the disks and the volume type IDs, and creates the flavors that belong to the cartesian product of the specified arguments. For example, the following command will create two flavors of 40G disk size of volume type with ID 1, 4G RAM and 2 or 4 CPUs.

$ snf-manage flavor-create 2,4 4096 40 1
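
The cartesian product can be made explicit with a few lines of Python. This is only an illustration of which combinations result from comma-separated arguments, not the implementation of flavor-create; the function name is hypothetical.

```python
from itertools import product


def expand_flavors(cpus, ram, disks, volume_types):
    """Expand comma-separated argument strings into (cpu, ram, disk, volume_type) tuples."""
    def values(arg):
        return [int(value) for value in arg.split(",")]

    return list(product(values(cpus), values(ram), values(disks), values(volume_types)))


if __name__ == "__main__":
    # Same arguments as the flavor-create example above.
    for flavor in expand_flavors("2,4", "4096", "40", "1"):
        print(flavor)
```

With the arguments of the example, two combinations result: one with 2 CPUs and one with 4 CPUs. Passing two values for two different arguments would yield four.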

To see the available flavors, run snf-manage flavor-list command. The administrator can delete a flavor by using flavor-modify command:

$ snf-manage flavor-modify --deleted=True <flavor_id>

Finally, the administrator can set if new servers can be created from a flavor or not, by setting the allow_create attribute:

$ snf-manage flavor-modify --allow-create=False <flavor_id>

Flavors that are marked with allow_create=False cannot be used by users to create new servers. However, they can still be used to resize existing VMs.

Images¶

When creating a VM, the user must also specify the image of the virtual server. Images are the static templates from which VM instances are initiated. Cyclades uses Pithos to store system and user-provided images, taking advantage of all Pithos features, like deduplication and the syncing protocol. An image is a file stored in Pithos with additional metadata that describe the image, e.g. the OS family or the root partition. To create a new image, the administrator or the user has to upload a file to Pithos and then register it as an Image with Cyclades. The user can then use this image to spawn new VMs.

Images can be private, public or shared between users, exactly like Pithos

files. Since user-provided public images can be untrusted, the administrator

can denote which users are trusted by adding them to the

UI_SYSTEM_IMAGES_OWNERS setting in the

/etc/synnefo/20-snf-cyclades-app-ui.conf file. Images of those users are

properly displayed in the UI.

When creating a new VM, Cyclades passes the location of the image and its metadata to Ganeti. After Ganeti creates the instance’s disk, snf-image will copy the image to the new disk and perform the image customization phase. During this phase, snf-image sends notifications to Cyclades about the progress of the image deployment and customization. Customization includes resizing the root file system, file injection (e.g. SSH keys) and setting a custom hostname. For a better understanding of snf-image, read the corresponding documentation.

For passing sensitive data about the image to Ganeti, like the VM’s password, Cyclades keeps all sensitive data in memory caches (memcache) and never allows them to hit the disk. The data are exposed to snf-image via a one-time URL that is exposed by the vmapi application. So, instead of passing sensitive data to snf-image via Ganeti, Cyclades passes a one-time configuration URL that contains a random UUID. After snf-image gets the sensitive data, the URL is invalidated so no one else can access them.
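
The one-time behaviour can be sketched with a small in-memory store. This is not the vmapi code: the class and method names are hypothetical, and a plain dictionary stands in for memcache. It only shows the idea that the data are kept in memory under a random UUID and disappear on first read.

```python
import uuid


class OneTimeStore:
    """Keep sensitive parameters in memory and hand them out exactly once."""

    def __init__(self):
        self._data = {}  # in-memory stand-in for memcache

    def put(self, params):
        """Store params under a fresh random UUID and return that UUID."""
        key = str(uuid.uuid4())
        self._data[key] = params
        return key

    def pop(self, key):
        """Return the params for key and invalidate it; None if unknown or already used."""
        return self._data.pop(key, None)


if __name__ == "__main__":
    store = OneTimeStore()
    key = store.put({"password": "example_passw0rd"})
    print(store.pop(key) is not None)  # first read succeeds
    print(store.pop(key) is None)      # second read finds nothing
```

The UUID returned by put would form the variable part of the one-time configuration URL.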

The administrator can register images, exactly like users, using a system user

(a user that is defined in the UI_SYSTEM_IMAGES_OWNERS setting). For

example, the following command will register the

pithos://u53r-un1qu3-1d/images/debian_base-6.0-7-x86_64.diskdump as an

image to Cyclades:

$ kamaki image register --name="Debian Base" \

--location=pithos://u53r-un1qu3-1d/images/debian_base-6.0-7-x86_64.diskdump \

--public \

--disk-format=diskdump \

--property OSFAMILY=linux --property ROOT_PARTITION=1 \

--property description="Debian Squeeze Base System" \

--property size=451 --property kernel=2.6.32 --property GUI="No GUI" \

--property sortorder=1 --property USERS=root --property OS=debian

Deletion of an image is done via kamaki image unregister command, which will delete the Cyclades Images but will leave the Pithos file as is (unregister).

Apart from using kamaki to see and handle the available images, the administrator can use the snf-manage image-list and snf-manage image-show commands to list and inspect the available public images. Also, the --user option can be used to see the images of a specific user.

Custom image listing sections¶

Since Synnefo 0.16.2, the installation wizard supports custom image listing

sections. Images with the LISTING_SECTION image property set, and whose

owner uuid is listed in the UI_IMAGE_LISTING_USERS Cyclades setting (in

/etc/synnefo/20-snf-cyclades-app-ui.conf) will be displayed in a separate

section in the installation wizard. The name of the new section will be the

value of the LISTING_SECTION image property.

Virtual Servers¶

As mentioned, Cyclades uses Ganeti for management of VMs. The administrator can handle Cyclades VMs just like any other Ganeti instance, via gnt-instance commands. All Ganeti instances that belong to Synnefo are distinguished from others by a prefix in their names. This prefix is defined in the BACKEND_PREFIX_ID setting in /etc/synnefo/20-snf-cyclades-app-backend.conf.
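
Given a list of Ganeti instance names, the Synnefo-owned ones can be picked out by that prefix. The sketch below is illustrative only: the prefix value "snf-" and the instance names are hypothetical, and the real prefix is whatever BACKEND_PREFIX_ID is set to in your installation.

```python
def synnefo_instances(instance_names, prefix):
    """Return the instance names that start with the Synnefo backend prefix."""
    return [name for name in instance_names if name.startswith(prefix)]


if __name__ == "__main__":
    # Hypothetical instance names, as they might appear in a Ganeti cluster.
    names = ["snf-42", "snf-43", "mail.example.com"]
    print(synnefo_instances(names, prefix="snf-"))
```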

Apart from handling Cyclades VM at the Ganeti level, the administrator can also use the snf-manage server-* commands. These command cover the most common tasks that are relative with VM handling. Below we describe some of them, but for more information you can use the –help option of all snf-manage server-* commands. These command cover the most

The snf-manage server-create command can be used to create a new VM for some user. This command can be useful when the administrator wants to test Cyclades functionality without starting the API service, e.g. after an upgrade. Also, with the --backend-id option, the VM will be created in the specified backend, bypassing automatic VM allocation.

$ snf-manage server-create --flavor=1 --image=fc0f6858-f962-42ce-bf9a-1345f89b3d5e \

--user=7cf4d078-67bf-424d-8ff2-8669eb4841ea --backend-id=2 \

--password='example_passw0rd' --name='test_vm'

The above command will create a new VM for user 7cf4d078-67bf-424d-8ff2-8669eb4841ea in the Ganeti backend with ID 2. By default this command will issue a Ganeti job to create the VM (OP_INSTANCE_CREATE) and return. As in other commands, the --wait=True option can be used to wait for the successful completion of the job.

The snf-manage server-list command can be used to list all the available servers. The command supports some useful options, like listing the servers of a user, listing the servers that exist in a Ganeti backend and listing deleted servers. Also, as in most *-list commands, the --filter-by option can be used to filter the results. For example, the following command will only display the started servers of a specific flavor:

$ snf-manage server-list --filter-by="operstate=STARTED,flavor=<flavor_id>"

Another very useful command is server-inspect, which displays all available information about the state of the server in the DB and in the Ganeti backend. The output gives an easy overview of the state of the VM, which can be useful for debugging.

Also the administrator can suspend a user’s VM, using the server-modify command:

$ snf-manage server-modify --suspended=True <server_id>

The user is forbidden to do any action on an administratively suspended VM, which is useful for abuse cases.

Ganeti backends¶

Since v0.11, Synnefo is able to manage multiple Ganeti clusters (backends), making it capable of scaling linearly to tens of thousands of VMs. Backends can be dynamically added or removed via snf-manage commands.

Each newly created VM is allocated to a Ganeti backend by the Cyclades backend allocator. The VM is “pinned” to this backend and cannot move to another one during its lifetime. The backend allocator decides in which backend to spawn the VM based on the policies set and the available resources of each backend, trying to balance the load between them. Also, networks are created on all Ganeti backends, to ensure that VMs residing on different backends can be connected to the same networks.

A backend can be marked as drained in order to be excluded from automatic server allocation and not receive new servers. Also, a backend can be marked as offline to denote that the backend is not healthy (e.g. broken master) and avoid the penalty of connection timeouts.

Finally, Cyclades is able to manage Ganeti backends with different enabled hypervisors (kvm, xen), and different enabled disk templates.

Listing existing backends¶

To list all the Ganeti backends known to Synnefo, we run:

$ snf-manage backend-list

Adding a new Ganeti backend¶

Backends are dynamically added under the control of Synnefo with the snf-manage backend-add command. In this section it is assumed that a Ganeti cluster named cluster.example.com is already up and running and configured to be able to host Synnefo VMs.

To add this Ganeti cluster, we run:

$ snf-manage backend-add --clustername=cluster.example.com --user="synnefo_user" --pass="synnefo_pass"

where clustername is the Cluster hostname of the Ganeti cluster, and

user and pass are the credentials for the Ganeti RAPI user. All

backend attributes can be also changed dynamically using the snf-manage

backend-modify command.

snf-manage backend-add will also create all existing public networks on the new backend. You can verify that the backend has been added by running snf-manage backend-list.

Note that no VMs will be spawned on this backend yet, since by default it is in a drained state after addition, so that its state can be verified manually.

So, after making sure everything works as expected, make the new backend active

by un-setting the drained flag. You can do this by running:

$ snf-manage backend-modify --drained=False <backend_id>

Allocation of VMs in Ganeti backends¶

As already mentioned, the Cyclades backend allocator is responsible for allocating new VMs to backends. This allocator does not choose the exact Ganeti node that will host the VM but just the Ganeti backend. The exact node is chosen by the Ganeti cluster’s allocator (hail).

The decision about which backend will host a VM is based on the defined

policies and the available resources. The default filter allocator filters the

available backends based on the policy filters set and then computes a score

for each backend, that shows its load factor. The one with the minimum

score is chosen. The admin can exclude backends from the allocation phase by

marking them as drained by running:

$ snf-manage backend-modify --drained=True <backend_id>
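The filter-then-score procedure described above can be sketched in Python as follows. This is only an illustration under stated assumptions: backends are plain dictionaries, `enough_ram` is a made-up policy filter, and `load_score` is a hypothetical load factor (the mean of the used RAM and used disk fractions); the formula actually used by Synnefo is not given here.

```python
def load_score(backend):
    """Hypothetical load factor: mean of used RAM and used disk fractions."""
    ram_used = 1.0 - float(backend["mfree"]) / backend["mtotal"]
    disk_used = 1.0 - float(backend["dfree"]) / backend["dtotal"]
    return (ram_used + disk_used) / 2


def enough_ram(backends, vm):
    """Example policy filter: drop backends without enough free memory."""
    return [b for b in backends if b["mfree"] >= vm["ram"]]


def choose_backend(backends, filters, vm):
    """Filter the available backends, then return the least loaded one."""
    # Drained and offline backends never take part in the allocation.
    candidates = [b for b in backends
                  if not b["drained"] and not b["offline"]]
    for policy_filter in filters:
        candidates = policy_filter(candidates, vm)
    if not candidates:
        return None
    # The backend with the minimum score is chosen.
    return min(candidates, key=load_score)


backends = [
    {"id": 1, "drained": False, "offline": False,
     "mfree": 4096, "mtotal": 8192, "dfree": 100, "dtotal": 200},
    {"id": 2, "drained": False, "offline": False,
     "mfree": 6144, "mtotal": 8192, "dfree": 150, "dtotal": 200},
    {"id": 3, "drained": True, "offline": False,
     "mfree": 8192, "mtotal": 8192, "dfree": 200, "dtotal": 200},
]
chosen = choose_backend(backends, [enough_ram], {"ram": 1024})
print(chosen["id"])
```

In this sample, backend 3 is idle but drained, so it is skipped, and backend 2 wins because its score (0.25) is lower than that of backend 1 (0.5).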

The backend resources are periodically updated, at a period defined by

the BACKEND_REFRESH_MIN setting, or by running snf-manage

backend-update-status command. It is advised to have a cron job running this

command at a smaller interval than BACKEND_REFRESH_MIN in order to remove

the load of refreshing the backends stats from the VM creation phase.

Finally, the admin can decide to have a user’s VMs allocated to a specific backend, with the BACKEND_PER_USER setting. This is a mapping between users and backends. If the user is found in BACKEND_PER_USER, then Synnefo allocates all of that user’s VMs to the backend given in the mapping, even if it is marked as drained (useful for testing).
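A minimal sketch of this override, assuming that the mapping is a Python dictionary whose keys identify users and whose values are backend IDs (the exact key and value formats expected by Synnefo are an assumption here, and `backend_for_user` is a hypothetical helper):

```python
# Assumed format: user identifier -> backend ID
BACKEND_PER_USER = {"7cf4d078-67bf-424d-8ff2-8669eb4841ea": 2}


def backend_for_user(user, allocate_automatically, per_user=BACKEND_PER_USER):
    """Return the pinned backend ID for a user, else ask the allocator."""
    if user in per_user:
        # The mapping wins even if that backend is marked as drained.
        return per_user[user]
    return allocate_automatically()


# The lambda stands in for the regular backend allocator.
print(backend_for_user("7cf4d078-67bf-424d-8ff2-8669eb4841ea", lambda: 1))
print(backend_for_user("some-other-user", lambda: 1))
```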

Allocation based on disk-templates¶

Besides the filter policies and the available resources of each Ganeti backend, the allocator takes into consideration the disk template of the instance when trying to allocate it to a Ganeti backend. Specifically, the allocator checks if the disk template of the instance’s flavor is among the available disk templates of each Ganeti backend.

A Ganeti cluster has a list of enabled disk templates (--enabled-disk-templates) and a list of allowed disk templates for new instances (--ipolicy-disk-templates). See the gnt-cluster manpage for more details about these options.

When Synnefo allocates an instance, it checks whether the disk template of the new instance belongs to both the enabled and the ipolicy disk templates. You can see the list of the available disk templates by running snf-manage backend-list. This list should be updated automatically after changing these options in Ganeti, and it can also be updated by running snf-manage backend-update-status.

So the administrator can route instances to different backends based on the disk template of their flavor, by modifying the enabled or ipolicy disk templates of each backend. Also, the administrator can route instances between different nodes of the same Ganeti backend, by modifying the same options at the nodegroup level (see the gnt-group manpage for more details).
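The disk template check can be pictured with the short Python sketch below. The backend dictionaries, their `enabled` and `ipolicy` keys and the function name are assumptions made for illustration only.

```python
def eligible_backends(backends, disk_template):
    """Names of backends where the template is both enabled and allowed."""
    return [b["name"] for b in backends
            if disk_template in b["enabled"] and disk_template in b["ipolicy"]]


# Hypothetical clusters and their disk template lists
backends = [
    {"name": "ganeti1", "enabled": ["drbd", "plain"], "ipolicy": ["drbd"]},
    {"name": "ganeti2", "enabled": ["drbd", "ext"], "ipolicy": ["drbd", "ext"]},
]
print(eligible_backends(backends, "drbd"))
print(eligible_backends(backends, "ext"))
print(eligible_backends(backends, "plain"))
```

Here a flavor with the ext template can only land on ganeti2, while the plain template is rejected everywhere: ganeti1 has it enabled, but it is missing from that cluster's ipolicy list.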

Allocation based on custom filter¶

The default Synnefo allocator is the FilterAllocator which filters the available backends based on a configurable list of filters. The administrator can modify the backend allocation process by implementing her own filter and injecting it into the FilterAllocator’s filter chain.

Each custom filter must be a subclass of the FilterBase class, located at synnefo.logic.allocators.base, and implement the filter_backends method. The administrator can then insert the custom filter into the filter chain by altering the BACKEND_FILTER_ALLOCATOR_FILTERS setting to include the filter at the proper position in the list.

The filter_backends method is used to filter the backends that the allocator can choose from. Altering this list changes which backends are considered for allocation. It takes two arguments:

- A list of the available backends that have passed from the previous filters. In the beginning, the process is initiated with all the available backends (i.e. those that are neither drained nor offline). Each backend is a django object and an instance of the Backend model.

- A map with 4 keys representing the attributes of the VM to be allocated:

- ram: The size of the memory we want to allocate on the backend.

- disk: The size of the disk we want to allocate on the backend.

- cpu: The size of the CPU we want to allocate on the backend.

- project: The project of the VM we want to allocate.

The method returns a list of backends that can be chosen as the VM’s backend based on the filter’s policy.

Note that the disk template allocation policy is always applied, irrespective of the BACKEND_ALLOCATOR_MODULE or the BACKEND_FILTER_ALLOCATOR_FILTERS settings.
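The shape of a custom filter can be sketched as follows. To keep the example self-contained, `FilterBase` and `Backend` below are local stand-ins for the real classes, and the `mfree` attribute is an assumption about what a backend object exposes; in a real deployment the filter would subclass the FilterBase class from synnefo.logic.allocators.base.

```python
class FilterBase(object):
    """Local stand-in for the real FilterBase class (assumed interface)."""

    def filter_backends(self, backends, vm):
        raise NotImplementedError


class Backend(object):
    """Minimal stand-in for the Backend model; mfree is an assumed field."""

    def __init__(self, backend_id, mfree):
        self.id = backend_id
        self.mfree = mfree


class EnoughRamFilter(FilterBase):
    """Hypothetical filter: keep backends with enough free memory."""

    def filter_backends(self, backends, vm):
        # backends: those that passed the previous filters in the chain
        # vm: map with the keys ram, disk, cpu and project
        return [b for b in backends if b.mfree >= vm["ram"]]


vm = {"ram": 1024, "disk": 20, "cpu": 2, "project": "example-project"}
remaining = EnoughRamFilter().filter_backends(
    [Backend(1, 512), Backend(2, 4096)], vm)
print([b.id for b in remaining])
```

The filter returns a new list instead of modifying its input, so each filter in the chain only sees the backends that survived the previous ones.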

Allocation based on custom allocator¶

In order to determine which Ganeti cluster is best for allocating a virtual machine, the allocator uses two methods:

- filter_backends

- allocate

The filter_backends method is used to filter out the backends that the allocator shouldn’t even consider. It takes two arguments:

- A list of the available backends. A backend is available if it is not drained or offline. Each backend is a django object and an instance of the Backend model.

- A map with 4 keys:

- ram: The size of the memory we want to allocate on the backend.

- disk: The size of the disk we want to allocate on the backend.

- cpu: The size of the CPU we want to allocate on the backend.

- project: The project of the VM we want to allocate.

The allocate method returns the backend that will be used to allocate the virtual machine. It takes two arguments:

- A list of the available backends. A backend is available if it is not drained or offline. Each backend is a django object and an instance of the Backend model.

- A map with 4 keys:

- ram: The size of the memory we want to allocate on the backend.

- disk: The size of the disk we want to allocate on the backend.

- cpu: The size of the CPU we want to allocate on the backend.

- project: The project of the VM we want to allocate.

So the administrator can create a custom allocation algorithm by creating a class that inherits from AllocatorBase, located at synnefo.logic.allocators.base, and implements the above methods.

To make Synnefo use this allocation algorithm, the administrator just has to change the BACKEND_ALLOCATOR_MODULE setting to the path of the allocator class.
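A custom allocator following the two-method contract above might look like the sketch below. `AllocatorBase` and `Backend` are local stand-ins so that the example runs on its own, and the `mfree` and `dfree` fields as well as the "most free memory" policy are assumptions chosen for illustration.

```python
from collections import namedtuple

# Stand-in for the Backend model; the field names are assumptions.
Backend = namedtuple("Backend", ["id", "mfree", "dfree"])


class AllocatorBase(object):
    """Local stand-in for the real AllocatorBase class (assumed interface)."""

    def filter_backends(self, backends, vm):
        raise NotImplementedError

    def allocate(self, backends, vm):
        raise NotImplementedError


class MostFreeRamAllocator(AllocatorBase):
    """Hypothetical policy: choose the backend with the most free memory."""

    def filter_backends(self, backends, vm):
        # Drop the backends that cannot fit the VM at all.
        return [b for b in backends
                if b.mfree >= vm["ram"] and b.dfree >= vm["disk"]]

    def allocate(self, backends, vm):
        if not backends:
            return None
        return max(backends, key=lambda b: b.mfree)


vm = {"ram": 2048, "disk": 40, "cpu": 2, "project": "example-project"}
allocator = MostFreeRamAllocator()
candidates = allocator.filter_backends(
    [Backend(1, 1024, 500), Backend(2, 4096, 100), Backend(3, 8192, 10)], vm)
print(allocator.allocate(candidates, vm).id)
```

Backend 3 has the most free memory but too little disk, so it is filtered out first and backend 2 is selected.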

Removing an existing Ganeti backend¶

In order to remove an existing backend from Synnefo, you must first make sure that there are no active servers in the backend, and then run:

$ snf-manage backend-remove <backend_id>

Virtual Networks¶

Cyclades also implements the Network service and exposes the OpenStack Neutron (formerly Quantum) API. Cyclades supports full IPv4 and IPv6 connectivity to the public internet for its VMs. Also, Cyclades provides L2 and L3 virtual private networks, giving the user freedom to create arbitrary network topologies of interconnected VMs.

Public networking is deployment specific and must be customized based on the specific needs of the system administrator. Private virtual networks can be provided by different network technologies, which are exposed as different network flavors. For a better understanding of networking, please refer to the Network section.

A Cyclades virtual network is an isolated Layer-2 broadcast domain. A network can also have an associated IPv4 and IPv6 subnet representing the Layer-3 characteristics of the network. Each subnet represents an IP address block that is used in order to assign addresses to VMs.

To connect a VM to a network, a port must be created, which represents a virtual port on a network switch. VMs are connected to networks by attaching a virtual interface to a port.

Cyclades also supports floating IPs, which are public IPv4 addresses that can be dynamically (hot-plug) added to and removed from VMs. Floating IPs are a quota-controlled resource that is allocated to each user. Unlike other cloud platforms, floating IPs are not implemented using 1-1 NAT to a port’s private IP. Instead, floating IPs are directly assigned to virtual interfaces of VMs.

Exactly like VMs, networks can be handled as Ganeti networks via gnt-network commands. All Ganeti networks that belong to Synnefo are named with the prefix ${BACKEND_PREFIX_ID}-net-. Also, there are a number of snf-manage commands that can be used to handle networks, subnets, ports and floating IPs. Below we present a use case scenario using some of these commands. For a better understanding of these commands, refer to their help messages.

Create a virtual private network for user 7cf4d078-67bf-424d-8ff2-8669eb4841ea using the PHYSICAL_VLAN flavor, which means that the network will be uniquely assigned a physical VLAN. The network is assigned an IPv4 subnet, described by its CIDR and gateway. Also, the --dhcp=True option is used, to make nfdhcpd respond to DHCP queries from VMs.

$ snf-manage network-create --user=7cf4d078-67bf-424d-8ff2-8669eb4841ea --name=prv_net-1 \

--subnet=192.168.2.0/24 --gateway=192.168.2.1 --dhcp=True --flavor=PHYSICAL_VLAN

Inspect the state of the network in Cyclades DB and in all the Ganeti backends:

$ snf-manage network-inspect <network_id>

Inspect the state of the network’s subnet, containing an overview of the subnet’s IPv4 address allocation pool:

$ snf-manage subnet-inspect <subnet_id>

Connect a VM to the created private network. The port will automatically be assigned an IPv4 address from one of the network’s available IPs. This command will result in sending an OP_INSTANCE_MODIFY Ganeti command and attaching a NIC to the specified Ganeti instance.

$ snf-manage port-create --network=<network_id> --server=<server_id>

Inspect the state of the port in the Cyclades DB and in the Ganeti backend:

$ snf-manage port-inspect <port_id>

Disconnect the VM from the network and delete the network:

$ snf-manage port-remove <port_id>

$ snf-manage network-remove <network_id>

Enabling DHCP¶

When connecting a VM to a network, Cyclades will automatically assign it an IP address from the IPv4 and/or IPv6 subnets of the network. If the network has no subnets, then the VM will not be assigned any IP address.

If the network has DHCP enabled, then the nfdhcpd daemon, which must be running on all Ganeti nodes, will respond to DHCP queries from VMs and assign to them the IP address that was allocated by Cyclades. DHCP can be enabled/disabled using the --dhcp option of the network-create command.

Public network connectivity¶

Since v0.14, users are able to dynamically connect and disconnect their VMs from public networks. In order to do that, they have to use a floating IP. Floating IPs are basically public IPv4 addresses that can be dynamically attached to and detached from VMs. The user creates a floating IP address from a network that has the floating_ip_pool attribute set. The floating IP is accounted to the user, who can then connect VMs to public networks by creating ports that use this floating IP. Performing this workflow from snf-manage would look like this:

$ snf-manage network-list --filter-by="floating_ip_pool=True"

id name user.uuid state public subnet.ipv4 gateway.ipv4 drained floating_ip_pool

---------------------------------------------------------------------------------------------

1 Internet None ACTIVE True 10.2.1.0/24 10.2.1.1 False True

$ snf-manage floating-ip-create --user=7cf4d078-67bf-424d-8ff2-8669eb4841ea --network=1

$ snf-manage floating-ip-list --user=7cf4d078-67bf-424d-8ff2-8669eb4841ea

id address network user.uuid server

------------------------------------------------------------------------

38 10.2.1.2 1 7cf4d078-67bf-424d-8ff2-8669eb4841ea 42

$ snf-manage port-create --user=7cf4d078-67bf-424d-8ff2-8669eb4841ea --network=1 \

--ipv4-address=10.2.1.2 --floating-ip=38

$ snf-manage port-list --user=7cf4d078-67bf-424d-8ff2-8669eb4841ea

id user.uuid mac_address network server_id fixed_ips state

--------------------------------------------------------------------------------------------------

163 7cf4d078-67bf-424d-8ff2-8669eb4841ea aa:00:00:45:13:98 1 77 10.2.1.2 ACTIVE

$ snf-manage port-remove 163

$ snf-manage floating-ip-remove 38

Users do not have permission to connect and disconnect VMs from public networks without using a floating IP address. However, the administrator has the ability to perform these tasks, using the port-create and port-remove commands.

Network connectivity for newly created servers¶

When creating a virtual server, the user can specify the networks that the newly created server will be connected to. Beyond this, the administrator can define a list of networks that every new server will be forced to connect to. For example, you can force all VMs to be connected to a public network containing a metadata server. The networks must be specified in the CYCLADES_FORCED_SERVER_NETWORKS setting in /etc/synnefo/20-snf-cyclades-app-api.conf. For the networks in this setting, no access control or quota policy is enforced!

Finally, the administrator can define a list of networks that new servers will be connected to if the user has not specified networks in the request to create the server. Access control and quota policy are enforced, just as if the user had specified these networks. The list of these networks is defined in the CYCLADES_DEFAULT_SERVER_NETWORKS setting in /etc/synnefo/20-snf-cyclades-app-api.conf. This setting should only be used if Cyclades is being accessed by external clients that are unaware of the Neutron API extensions in the Compute API.

Each member of the above mentioned settings can be:

- a network UUID

- a tuple of network UUIDs: the server will be connected to only one of these networks, e.g. one that has a free IPv4 address

- SNF:ANY_PUBLIC_IPV4: the server will be connected to any network with an IPv4 subnet defined

- SNF:ANY_PUBLIC_IPV6: the server will be connected to any network with only an IPv6 subnet defined.

- SNF:ANY_PUBLIC: the server will be connected to any public network.
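As a hedged illustration of the member types listed above, the following sketch assumes that both settings are Python lists and uses placeholder network identifiers (`net-a`, `net-b`, `net-meta`); the `describe` helper is hypothetical and only spells out how each kind of member is interpreted.

```python
# Placeholder identifiers; replace with real network UUIDs.
NET_A, NET_B, NET_META = "net-a", "net-b", "net-meta"

# Every new server is connected to these, without access or quota checks.
CYCLADES_FORCED_SERVER_NETWORKS = [NET_META]

# Used only when the create request does not specify any network.
CYCLADES_DEFAULT_SERVER_NETWORKS = [
    (NET_A, NET_B),          # the server joins only one of these two
    "SNF:ANY_PUBLIC_IPV6",
]


def describe(member):
    """Explain how one member of the settings above is interpreted."""
    if isinstance(member, tuple):
        return "one of: " + ", ".join(member)
    if member.startswith("SNF:ANY_PUBLIC"):
        return "any public network matching " + member
    return "network " + member


for member in CYCLADES_FORCED_SERVER_NETWORKS + CYCLADES_DEFAULT_SERVER_NETWORKS:
    print(describe(member))
```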

Public IP accounting¶

There are many use cases, e.g. abuse reports, where you need to find which user or which server held a public IP address. For this reason, Cyclades keeps track of the usage of public IPv4/IPv6 addresses. Specifically, when an IP address is attached to or detached from a virtual server, the timestamp of the action is logged. This information can be found using the ip-list command:

$ snf-manage ip-list

Show usage of a specific address:

$ snf-manage ip-list --address=192.168.2.1

Show public IPs of a specific server:

$ snf-manage ip-list --server=<server_id>

Managing Network Resources¶

Proper operation of the Cyclades Network Service depends on the unique assignment of specific resources to each type of virtual network. Specifically, these resources are:

- IP addresses. Cyclades creates a Pool of IPs for each Network, and assigns a unique IP address to each VM, thus connecting it to this Network. You can see the IP pool of each network by running snf-manage subnet-inspect <subnet_ID>. IP pools are automatically created and managed by Cyclades, depending on the subnet of the Network.

- Bridges corresponding to physical VLANs, which are required for networks of flavor PHYSICAL_VLAN.

- One bridge corresponding to one physical VLAN, which is required for networks of flavor MAC_FILTERED.

IPv4 addresses¶

An allocation pool of IPv4 addresses is automatically created for every network with an IPv4 subnet. By default, the allocation pool contains the range of IP addresses that are included in the subnet, except for the gateway and the broadcast address of the network. The range of IP addresses can be restricted using the --allocation-pool option of the snf-manage network-create command. The admin can externally reserve IP addresses, to exclude them from automatic allocation, with the --add-reserved-ips option of the snf-manage network-modify command. For example, the following command will reserve two IP addresses from the network with ID 42:

$ snf-manage network-modify --add-reserved-ips=10.0.0.21,10.0.0.22 42
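The effect of the default pool and of externally reserved addresses can be reproduced with Python's standard ipaddress module. The subnet 10.0.0.0/24 and the gateway 10.0.0.1 are assumptions chosen to match the reserved addresses in the command above, and `default_allocation_pool` is a hypothetical helper, not a Cyclades function.

```python
import ipaddress


def default_allocation_pool(cidr, gateway, reserved=()):
    """Addresses left for automatic allocation in an IPv4 subnet."""
    network = ipaddress.ip_network(cidr)
    excluded = {ipaddress.ip_address(gateway)}
    excluded.update(ipaddress.ip_address(addr) for addr in reserved)
    # hosts() already leaves out the network and broadcast addresses.
    return [ip for ip in network.hosts() if ip not in excluded]


pool = default_allocation_pool(
    "10.0.0.0/24", "10.0.0.1", reserved=["10.0.0.21", "10.0.0.22"])
print(len(pool), pool[0], pool[-1])
```

Of the 254 usable host addresses of the /24 subnet, the gateway and the two reserved addresses are removed, which leaves 251 addresses for automatic allocation.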

Warning

Externally reserving IP addresses is also possible at the Ganeti level. However, when using Cyclades with multiple Ganeti backends, the handling of IP pools must be performed from Cyclades!

Bridges¶

As already mentioned, Cyclades uses a pool of bridges that must correspond to physical VLANs at the Ganeti level. A bridge from the pool is assigned to each network of flavor PHYSICAL_VLAN. This pool is created using the snf-manage pool-create command. For example, the following command will create a pool of 20 bridges, from prv1 to prv20:

# snf-manage pool-create --type=bridge --base=prv --size=20

You can verify the creation of the pool, and check its contents by running:

# snf-manage pool-list

# snf-manage pool-show --type=bridge 1

Finally, you can use the pool-modify management command to externally reserve values from the pool, or to extend or shrink the pool if possible.

MAC Prefixes¶

Cyclades also uses a pool of MAC prefixes to assign to networks of flavor MAC_FILTERED. This pool is handled exactly like the pool of bridges, except that the type option must be set to mac-prefix:

# snf-manage pool-create --type=mac-prefix --base=aa:00:0 --size=65536

The above command will create a pool of MAC prefixes from aa:00:1 to b9:ff:f. The MAC prefix pool is responsible for providing only unicast and locally administered MAC addresses, so many of these prefixes will be externally reserved, to exclude them from allocation.
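Whether a prefix yields unicast, locally administered addresses depends only on the two least significant bits of the first octet: the multicast bit (0x01) must be clear and the locally administered bit (0x02) must be set. The hypothetical helper below illustrates that check; it is a sketch, not the code Cyclades uses to reserve prefixes.

```python
def is_unicast_local(mac_prefix):
    """True if MACs under this prefix are unicast and locally administered."""
    first_octet = int(mac_prefix.split(":")[0], 16)
    is_unicast = (first_octet & 0x01) == 0   # multicast bit is clear
    is_local = (first_octet & 0x02) != 0     # locally administered bit is set
    return is_unicast and is_local


for prefix in ["aa:00:1", "ab:00:0", "ac:00:0", "b9:ff:f"]:
    print(prefix, is_unicast_local(prefix))
```

Prefixes starting with aa pass the check, while ab and b9 have the multicast bit set and ac lacks the locally administered bit, so prefixes like these are the ones that end up reserved.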

Quotas¶

The handling of quotas for Cyclades resources is powered by the Astakos quota mechanism. During registration of the Cyclades service with Astakos, the Cyclades resources are also imported into Astakos for accounting and presentation.

Upon a request that will result in a resource creation or removal, Cyclades will communicate with Astakos to ensure that user quotas are within limits and update the corresponding usage. If a limit is reached, the request will be denied with an overLimit(413) fault.

The resources that are exported by Cyclades are the following:

- cyclades.vm: Number of virtual machines

- cyclades.total_cpu: Number of virtual machine processors

- cyclades.cpu: Number of virtual machine processors of running VMs

- cyclades.total_ram: Virtual machine memory size

- cyclades.ram: Virtual machine memory size of running VMs

- cyclades.disk: Virtual machine disk size

- cyclades.floating_ip: Number of floating IP addresses

- cyclades.network.private: Number of private virtual networks

Enforcing quotas¶

User quota can get overlimit, for example when a user is removed from a project granting Cyclades resources. However, no action is automatically taken to restrict users to their new limits. There is a special tool for quota enforcement:

# snf-manage enforce-resources-cyclades

This command will check and report which users are overlimit on their Cyclades quota; it will also suggest actions to be taken in order to enforce quota limits, dependent on the overlimit resource:

- cyclades.vm: Delete VMs

- cyclades.total_cpu: Delete VMs

- cyclades.cpu: Shutdown VMs

- cyclades.total_ram: Delete VMs

- cyclades.ram: Shutdown VMs

- cyclades.disk: Delete volumes (may also trigger VM deletion)

- cyclades.floating_ip: Detach and delete IPs

VMs to be deleted/shutdown are chosen first by state in the following order: ERROR, BUILD, STOPPED, STARTED or RESIZE and then by decreasing ID. When needing to remove IPs, we first choose IPs that are free, then those attached to VMs, using the same VM ordering.
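The VM ordering described above can be expressed as a sort key. In this sketch STARTED and RESIZE are given the same rank, which is one reading of "STARTED or RESIZE", and VMs are plain dictionaries; both choices are assumptions.

```python
# Lower rank means the VM is picked earlier for deletion or shutdown.
STATE_RANK = {"ERROR": 0, "BUILD": 1, "STOPPED": 2, "STARTED": 3, "RESIZE": 3}


def enforcement_order(vms):
    """Sort VMs by state rank first, then by decreasing ID."""
    return sorted(vms, key=lambda vm: (STATE_RANK[vm["state"]], -vm["id"]))


vms = [
    {"id": 5, "state": "STARTED"},
    {"id": 9, "state": "STOPPED"},
    {"id": 7, "state": "ERROR"},
    {"id": 3, "state": "ERROR"},
    {"id": 8, "state": "RESIZE"},
]
print([vm["id"] for vm in enforcement_order(vms)])
```

With this sample, the two VMs in ERROR state come first (highest ID first), followed by the stopped VM and finally the running ones.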

You need to specify the resources to be checked, using the option

--resources. A safe first attempt would be to specify

cyclades.cpu,cyclades.ram, that is, to check the less dangerous resources,

those that do not result in deleting any VM, volume, or IP.

If you want to handle overlimit quota in a safer way for resources that would normally trigger a deletion, you can use the option --soft-resources. Enforcing e.g. cyclades.vm in a “soft” way will shut down the VMs rather than delete them. This is useful as an initial warning for a user who is over quota; but notice that the user may restart their shut-down VMs, if the resources that control starting VMs allow them to do so.

With option --list-resources you can inspect the available resources

along with the related standard and soft enforce actions. It is also

possible to specify users and projects to be checked or excluded.

Actual enforcement is done with option --fix. In order to control the

load that quota enforcement may cause on Cyclades, one can limit the number

of operations per backend. For example,

# snf-manage enforce-resources-cyclades --fix --max-operations 10

will apply only the first 10 listed actions per backend. One can repeat the operation, until nothing is left to be done.

To control load a timeout can also be set for shutting down VMs (using

option --shutdown-timeout <sec>). This may be needed to avoid

expensive operations triggered by shutdown, such as Windows updates.

The command outputs the list of applied actions and reports whether each action succeeded or not. Failure is reported if for any reason Cyclades failed to process the job and submit it to the backend.

Cyclades advanced operations¶

Reconciliation mechanism¶

Cyclades - Ganeti reconciliation¶

On certain occasions, such as a Ganeti or RabbitMQ failure, the state of the Cyclades database may differ from the real state of VMs and networks in the Ganeti backends. The reconciliation process is designed to synchronize the state of the Cyclades DB with Ganeti. There are two management commands for reconciling VMs and networks, which detect stale, orphan and out-of-sync VMs and networks. To fix detected inconsistencies, use the --fix-all option.

$ snf-manage reconcile-servers

$ snf-manage reconcile-servers --fix-all

$ snf-manage reconcile-networks

$ snf-manage reconcile-networks --fix-all

Please see snf-manage reconcile-servers --help and snf-manage reconcile-networks --help for all the details.

Cyclades - Astakos reconciliation¶

As already mentioned, Cyclades communicates with Astakos for resource accounting and quota enforcement. In rare cases, e.g. unexpected failures, the two services may get unsynchronized. For this reason there are the reconcile-commissions-cyclades and reconcile-resources-cyclades commands, which synchronize the state of the two services. The first command will detect any pending commissions, while the second command will check whether the usage reported by Astakos is correct. To fix detected inconsistencies, use the --fix option.

$ snf-manage reconcile-commissions-cyclades

$ snf-manage reconcile-commissions-cyclades --fix

$ snf-manage reconcile-resources-cyclades

$ snf-manage reconcile-resources-cyclades --fix

Cyclades resources reconciliation¶

Reconciliation of pools will check the consistency of available pools by checking that the values from each pool are not used more than once, and also that the only reserved values in a pool are the ones used. Pool reconciliation will check pools of bridges, MAC prefixes, and IPv4 addresses for all networks. To fix detected inconsistencies, use the --fix option.

$ snf-manage reconcile-pools

$ snf-manage reconcile-pools --fix
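The two consistency checks described for pools can be sketched as follows. The inputs are hypothetical: `reserved` is the set of values the pool marks as taken and `used` lists the value held by each network; the real command works on the Cyclades database.

```python
from collections import Counter


def check_pool(reserved, used):
    """Report duplicate, stale and missing values for one pool."""
    counts = Counter(used)
    # Values assigned to more than one network.
    duplicates = sorted(v for v, n in counts.items() if n > 1)
    # Values marked as reserved although no network uses them.
    stale = sorted(set(reserved) - set(used))
    # Values in use that the pool does not mark as reserved.
    missing = sorted(set(used) - set(reserved))
    return duplicates, stale, missing


print(check_pool(["prv1", "prv2", "prv3"], ["prv1", "prv1", "prv4"]))
```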

snf-vncauthproxy configuration¶

Since snf-vncauthproxy-1.6 and snf-cyclades-app-0.16, it is possible

to run snf-vncauthproxy on a separate node and have multiple snf-vncauthproxy

instances / nodes, to serve clients.

The CYCLADES_VNCAUTHPROXY_OPTS setting has become a list of dictionaries, each of which defines one snf-vncauthproxy instance. Each vncauthproxy should be properly configured to accept control connections from the Cyclades host (via the --listen-address CLI parameter of snf-vncauthproxy) and VNC connections from clients (via the --proxy-listen-address CLI parameter).

For a two-node vncauthproxy setup, the CYCLADES_VNCAUTHPROXY_OPTS would

look like:

CYCLADES_VNCAUTHPROXY_OPTS = [
    {
        'auth_user': 'synnefo',
        'auth_password': 'secret_password',
        'server_address': 'node1.synnefo.live',
        'server_port': 24999,
        'enable_ssl': True,
        'ca_cert': '/path/to/cacert',
        'strict': True,
    },
    {
        'auth_user': 'synnefo',
        'auth_password': 'secret_password',
        'server_address': 'node2.synnefo.live',
        'server_port': 24999,
        'enable_ssl': False,
        'ca_cert': '/path/to/cacert',
        'strict': True,
    },
]

The server_address is the host / IP which Cyclades will use for the control

connection, in order to set up the forwarding.

The vncauthproxy DAEMON_OPTS option in /etc/default/vncauthproxy would

look like:

DAEMON_OPTS="--pid-file=$PIDFILE --listen-address=node1.synnefo.live --proxy-listen-address=node1.synnefo.live"

The --proxy-listen-address is the host / IP which clients (Web browsers /

VNC clients) will use to connect to snf-vncauthproxy.

If snf-vncauthproxy doesn’t run on the same node as Cyclades, it is highly recommended to enable SSL on the control socket, using strict verification of the server certificate. The only caveat, for the time being, is that the same certificate, provided to snf-vncauthproxy, is used for both the control and the client connections. If the control and client hosts (--listen-address and --proxy-listen-address parameters, respectively) differ, you should make sure to generate a certificate covering both (using one as the common name / CN, and specifying the other as a subject alternative name).

VM stats collecting¶

snf-cyclades-gtools comes with a collectd plugin to collect CPU and network stats for Ganeti VMs and an example collectd configuration. snf-stats-app is a Django (snf-webproject) app that reads the VM stats (from RRD files) and serves the corresponding graphs.

The snf-stats-app was originally written by GRNET NOC as a WSGI Python app and was ported to a Synnefo (snf-webproject) app.

snf-stats-app configuration¶

The snf-stats-app node should have collectd installed. The collectd

configuration should enable the network plugin, assuming the server role, and

the RRD plugin / backend, to store the incoming stats. Your

/etc/collectd/collectd.conf should look like:

FQDNLookup true

LoadPlugin syslog

<Plugin syslog>
    LogLevel info
</Plugin>

LoadPlugin network
LoadPlugin rrdtool

<Plugin network>
    TimeToLive 128
    <Listen "okeanos.io" "25826">
        SecurityLevel "Sign"
        AuthFile "/etc/collectd/passwd"
    </Listen>
    ReportStats false
    MaxPacketSize 65535
</Plugin>

<Plugin rrdtool>
    DataDir "/var/lib/collectd/rrd"
    CacheTimeout 120
    CacheFlush 900
    WritesPerSecond 30
    RandomTimeout 0
</Plugin>

Include "/etc/collectd/filters.conf"
Include "/etc/collectd/thresholds.conf"

An example collectd config file is provided in

/usr/share/doc/snf-stats-app/examples/stats-colletcd.conf.

The recommended deployment is to run snf-stats-app using gunicorn with an

Apache2 or nginx reverse proxy (using the same configuration as the other

Synnefo services / apps). An example gunicorn config file is provided in

/usr/share/doc/snf-stats-app/examples/stats.gunicorn.

Make sure to edit the settings under

/etc/synnefo/20-snf-stats-app-settings.conf to match your deployment.

More specifically, you should change the STATS_BASE_URL setting (refer

to previous documentation on the BASE_URL settings used by the other Synnefo

services / apps) and the RRD_PREFIX and GRAPH_PREFIX settings.

You should also set the STATS_SECRET_KEY to a random string and make sure it’s the same as the CYCLADES_STATS_SECRET_KEY on the Cyclades host (see below).

RRD_PREFIX is the directory where collectd stores the RRD files. The

default setting matches the default RRD directory for the collectd RRDtool

plugin. In a more complex setup, the collectd daemon could run on a separate

host and export the RRD directory to the snf-stats-app node via e.g. NFS.

GRAPH_PREFIX is the directory where snf-stats-app stores the resulting stats graphs. If it doesn’t exist, create it manually:

# mkdir /var/cache/snf-stats-app/

# chown www-data:www-data /var/cache/snf-stats-app/

The snf-stats-app will typically run as the www-data user. In that case, make sure that the www-data user has read access to the RRD_PREFIX directory and read / write access to the GRAPH_PREFIX directory.
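The access requirements above can be checked with a short script. This is only a sketch: the function name `check_stats_dirs` is hypothetical, and the script must be run as the user that serves snf-stats-app (for example through `sudo -u www-data`) for the result to be meaningful:

```python
import os


def check_stats_dirs(rrd_prefix, graph_prefix):
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    # RRD_PREFIX only needs to be readable (and traversable).
    if not os.path.isdir(rrd_prefix):
        problems.append("RRD_PREFIX %s is not a directory" % rrd_prefix)
    elif not os.access(rrd_prefix, os.R_OK | os.X_OK):
        problems.append("RRD_PREFIX %s is not readable" % rrd_prefix)
    # GRAPH_PREFIX must be readable and writable.
    if not os.path.isdir(graph_prefix):
        problems.append("GRAPH_PREFIX %s is not a directory" % graph_prefix)
    elif not os.access(graph_prefix, os.R_OK | os.W_OK | os.X_OK):
        problems.append("GRAPH_PREFIX %s is not readable and writable" % graph_prefix)
    return problems


if __name__ == "__main__":
    for line in check_stats_dirs("/var/lib/collectd/rrd", "/var/cache/snf-stats-app"):
        print(line)
```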

snf-stats-app, based on the STATS_BASE_URL setting, will export the following URL ‘endpoints’:

- CPU stats bar: STATS_BASE_URL/v1.0/cpu-bar/<encrypted VM hostname>

- Network stats bar: STATS_BASE_URL/v1.0/net-bar/<encrypted VM hostname>

- CPU stats daily graph: STATS_BASE_URL/v1.0/cpu-ts/<encrypted VM hostname>

- Network stats daily graph: STATS_BASE_URL/v1.0/net-ts/<encrypted VM hostname>

- CPU stats weekly graph: STATS_BASE_URL/v1.0/cpu-ts-w/<encrypted VM hostname>

- Network stats weekly graph: STATS_BASE_URL/v1.0/net-ts-w/<encrypted VM hostname>
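The URL scheme listed above can be expressed as a small helper, which may be handy when testing a deployment by hand. This is an illustrative sketch; `stats_endpoints` is a hypothetical name, and the encrypted hostname is treated as an opaque string that Cyclades has already produced:

```python
ENDPOINT_KINDS = ("cpu-bar", "net-bar", "cpu-ts", "net-ts", "cpu-ts-w", "net-ts-w")


def stats_endpoints(stats_base_url, encrypted_hostname):
    """Build the six graph URLs for one VM from STATS_BASE_URL."""
    base = stats_base_url.rstrip("/")  # tolerate a trailing slash in the setting
    return {
        kind: "%s/v1.0/%s/%s" % (base, kind, encrypted_hostname)
        for kind in ENDPOINT_KINDS
    }
```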

You can verify that these endpoints are exported by issuing:

# snf-manage show_urls

snf-cyclades-gtools configuration¶

To enable VM stats collecting, you will need to:

Install collectd on every Ganeti (VM-capable) node.

Enable the Ganeti stats plugin in your collectd configuration. This can be achieved either by copying the example collectd conf file that comes with snf-cyclades-gtools (/usr/share/doc/snf-cyclades-gtools/examples/ganeti-stats-collectd.conf) or by adding the following line to your existing (or default) collectd conf file:

Include /etc/collectd/ganeti-stats.conf

In the latter case, make sure to configure collectd to send the collected stats to your collectd server (via the network plugin). For more details on how to do this, check the collectd example config file provided by the package and the collectd documentation.

snf-cyclades-app configuration¶

At this point, stats collecting should be enabled and working. You can check that everything is ok by inspecting the contents of the /var/lib/collectd/rrd/ directory (it will gradually get populated with directories containing RRD files / stats for every Synnefo instance).

You should also check that gunicorn and Apache2 are configured correctly by

accessing the graph URLs for a VM (whose stats have been populated in

/var/lib/collectd/rrd).
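To see at a glance whether the RRD directory is being populated, the files can be counted per top-level directory. The sketch below makes no assumption about the layout beneath each top-level directory; `count_rrd_files` is a hypothetical helper name:

```python
import os


def count_rrd_files(rrd_dir):
    """Map each top-level directory under rrd_dir to its number of .rrd files."""
    counts = {}
    for entry in sorted(os.listdir(rrd_dir)):
        top = os.path.join(rrd_dir, entry)
        if not os.path.isdir(top):
            continue
        total = 0
        # Walk the whole subtree, since RRD files may be nested.
        for _root, _dirs, files in os.walk(top):
            total += sum(1 for name in files if name.endswith(".rrd"))
        counts[entry] = total
    return counts


if __name__ == "__main__":
    for name, total in count_rrd_files("/var/lib/collectd/rrd").items():
        print("%s: %d RRD file(s)" % (name, total))
```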

Cyclades uses the CYCLADES_STATS_SECRET_KEY setting in 20-snf-cyclades-app to encrypt the instance hostname in the stats graph URL. This setting should be set to a random value and must match the STATS_SECRET_KEY on the Stats host.

Cyclades (snf-cyclades-app) fetches the stat graphs for VMs based on four

settings in 20-snf-cyclades-app-api.conf. The settings are:

- CPU_BAR_GRAPH_URL = 'https://stats.host/stats/v1.0/cpu-bar/%s'

- CPU_TIMESERIES_GRAPH_URL = 'https://stats.host/stats/v1.0/cpu-ts/%s'

- NET_BAR_GRAPH_URL = 'https://stats.host/stats/v1.0/net-bar/%s'

- NET_TIMESERIES_GRAPH_URL = 'https://stats.host/stats/v1.0/net-ts/%s'

Make sure that you change these settings to match your STATS_BASE_URL (and generally the Apache2 / gunicorn deployment on your stats host).

Cyclades will pass these URLs to the Cyclades UI and the user’s browser will fetch them when needed.
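Since the four Cyclades settings have to agree with STATS_BASE_URL on the stats host, a quick consistency check can catch copy-and-paste mistakes. This is a sketch under the assumption that the settings follow exactly the URL pattern shown above; `mismatched_graph_settings` is a hypothetical helper, not part of Synnefo:

```python
EXPECTED_PATHS = {
    "CPU_BAR_GRAPH_URL": "cpu-bar",
    "CPU_TIMESERIES_GRAPH_URL": "cpu-ts",
    "NET_BAR_GRAPH_URL": "net-bar",
    "NET_TIMESERIES_GRAPH_URL": "net-ts",
}


def mismatched_graph_settings(stats_base_url, settings):
    """Return the names of settings that do not match STATS_BASE_URL."""
    base = stats_base_url.rstrip("/")
    bad = []
    for name, path in sorted(EXPECTED_PATHS.items()):
        # The trailing %s is the placeholder for the encrypted VM hostname.
        expected = "%s/v1.0/%s/%%s" % (base, path)
        if settings.get(name) != expected:
            bad.append(name)
    return bad
```

An empty result means that every setting points at the expected endpoint and still carries its `%s` placeholder.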

Helpdesk¶

The Helpdesk application provides the ability to view the virtual servers and networks of all users, along with the ability to perform some basic actions like administratively suspending a server. You can perform look-ups by user UUID or email, by server ID (vm-$id) or by an IPv4 address.

To activate the helpdesk application, set the HELPDESK_ENABLED setting to True. Access to helpdesk views (under $BASE_URL/helpdesk) is only allowed to users that belong to the Astakos groups defined in the HELPDESK_PERMITTED_GROUPS setting, which by default contains the helpdesk group. For example, to allow <user_id> to access the helpdesk views, run the following commands on the Astakos node:

snf-manage group-add helpdesk

snf-manage user-modify --add-group=helpdesk <user_id>

Cyclades internals¶

Asynchronous communication with Ganeti backends¶

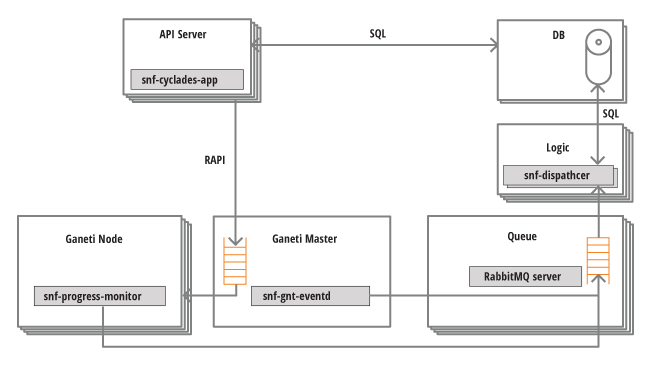

Synnefo uses Google Ganeti backends for VM cluster management. So that Cyclades can handle thousands of user requests, Cyclades and Ganeti communicate asynchronously. Briefly, requests are submitted to Ganeti through Ganeti’s RAPI/HTTP interface, and asynchronous notifications about the progress of Ganeti jobs are then created and pushed upwards to Cyclades. The architecture and the communication with a Ganeti backend are shown in the figure above.

The Cyclades API server is responsible for handling user requests. Read-only requests are served directly by looking up the Cyclades DB. If the request needs an action in the Ganeti backend, Cyclades submits jobs to the Ganeti master using the Ganeti RAPI interface.

While Ganeti executes the job, snf-ganeti-eventd and snf-progress-monitor monitor the progress of the job and send corresponding messages to the RabbitMQ servers. These components are part of snf-cyclades-gtools and must be installed on all Ganeti nodes. Specifically:

- snf-ganeti-eventd sends messages about operations affecting the operating state of instances and networks. It works by monitoring the Ganeti job queue.

- snf-progress-monitor sends messages about the progress of the Image deployment phase, which is done by the Ganeti OS Definition snf-image.

Finally, snf-dispatcher consumes messages from the RabbitMQ queues, processes these messages and updates the state of the Cyclades DB accordingly. Subsequent requests to the Cyclades API will retrieve the updated state from the DB.
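The flow described in this section (a producer publishes notifications, a consumer applies them to the database, and later API reads see the new state) can be illustrated with a toy model. This is not Synnefo code: an in-process `queue.Queue` stands in for RabbitMQ, a dict stands in for the Cyclades DB, and the message fields and function names are invented for the example:

```python
import json
import queue


def eventd_publish(mq, instance, status):
    """Stand-in for snf-ganeti-eventd: push a JSON notification to the queue."""
    mq.put(json.dumps({"instance": instance, "status": status}))


def dispatcher_drain(mq, db):
    """Stand-in for snf-dispatcher: consume pending messages and update db."""
    handled = 0
    while True:
        try:
            raw = mq.get_nowait()
        except queue.Empty:
            return handled
        msg = json.loads(raw)
        db[msg["instance"]] = msg["status"]  # last message wins
        handled += 1


if __name__ == "__main__":
    mq, db = queue.Queue(), {}
    eventd_publish(mq, "vm-42", "BUILD")
    eventd_publish(mq, "vm-42", "ACTIVE")
    dispatcher_drain(mq, db)
    print(db)  # the state a subsequent API read would see
```

The point of the decoupling is that the API server never blocks on a Ganeti job; it only reads whatever state the consumer has recorded so far.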

Admin Dashboard (Admin)¶

Introduction¶

Admin is the Synnefo component that lets trusted users view and manage Synnefo entities such as users, VMs and projects. Additionally, it automatically generates charts and statistics using data from the Astakos/Cyclades stats.

Access and permissions¶

The Admin dashboard can be accessed by default from the ADMIN_BASE_URL URL. Since there is no login form, the user must first log in to Astakos and then visit the above URL. Access will be granted only to users that belong to a predefined list of Astakos groups. By default, there are three group categories that are mapped 1-to-1 to Astakos groups:

- ADMIN_READONLY_GROUP: ‘admin-readonly’

- ADMIN_HELPDESK_GROUP: ‘helpdesk’

- ADMIN_GROUP: ‘admin’

The group categories can be changed using the ADMIN_PERMITTED_GROUPS

setting. In order to change the Astakos group that a category corresponds to,

the administrator can specify the group that he/she wants in the

ADMIN_READONLY_GROUP, ADMIN_HELPDESK_GROUP or ADMIN_GROUP settings.

Note that while any user that belongs to the ADMIN_PERMITTED_GROUPS has the

same access to the administrator dashboard, the actions that are allowed for a

group may differ. That’s because Admin implements a Role-Based Access Control

(RBAC) policy, which can be changed from the ADMIN_RBAC setting. By

default, users in the ADMIN_READONLY_GROUP cannot perform any actions. On

the other hand, users in the ADMIN_GROUP can perform all actions. In the

middle of the spectrum is the ADMIN_HELPDESK_GROUP, which by default

performs a small subset of reversible actions.
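The idea behind such a policy can be sketched as a mapping from actions to the groups allowed to perform them. The structure below is purely hypothetical and is not the actual format of the ADMIN_RBAC setting; the action names and the `is_allowed` helper are invented for illustration:

```python
# Hypothetical policy: action name -> set of groups allowed to perform it.
EXAMPLE_RBAC = {
    "suspend_vm": {"admin", "helpdesk"},  # reversible, so helpdesk may do it
    "destroy_vm": {"admin"},              # irreversible, admin only
}


def is_allowed(action, user_groups, rbac=EXAMPLE_RBAC):
    """Return True if any of the user's groups may perform the action."""
    return bool(rbac.get(action, set()) & set(user_groups))
```

With this policy, a user in ‘admin-readonly’ can open the dashboard but `is_allowed` returns False for every action, which mirrors the default behaviour described above.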

Setting up Admin¶

Admin is bundled by default with a list of sane settings. The most important

one, ADMIN_ENABLED, is set to True and defines whether Admin will be used

or not.

The administrator simply has to create the necessary Astakos groups and add trusted users to them. The following example creates an admin group and adds a user to it:

snf-manage group-add admin

snf-manage user-modify --add-group=admin <user_id>

Finally, the administrator must edit the 20-snf-admin-app-general.conf

settings file, uncomment the ADMIN_BASE_URL setting and assign the

appropriate URL to it. In most cases, this URL will be the top-level URL of the

Admin node, with the optional addition of an extra path (e.g. /admin) in

order to distinguish it from different components.

That’s all that is required for a single-node setup. For a multi-node setup, please consult the following section:

Multi-node Setup¶

Admin by design does not use the Astakos/Cyclades API for any action. Instead, it requires direct access to the Astakos/Cyclades database as well as the settings of their nodes. As a result, when installing Admin in a node, the Astakos and Cyclades packages will also be installed.

In order to disable the Astakos/Cyclades API in the Admin node, the administrator can add the following line in 99-local.conf (you can create it if it doesn’t exist):

ROOT_URLCONF="synnefo_admin.urls"

Note that the above change does not interfere with the ADMIN_BASE_URL,

which will be used normally.

Furthermore, if Astakos and Cyclades have separate databases, then they must be

defined in the DATABASES setting of 10-snf-webproject-database.conf. An

example setup is the following:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql_psycopg2',
        'NAME': 'snf_apps_cyclades',
        'HOST': <Cyclades host>,
        <...snip..>
    },
    'cyclades': {
        'ENGINE': 'django.db.backends.postgresql_psycopg2',
        'NAME': 'snf_apps_cyclades',
        'HOST': <Cyclades host>,
        <...snip..>
    },
    'astakos': {
        'ENGINE': 'django.db.backends.postgresql_psycopg2',
        'NAME': 'snf_apps_astakos',
        'HOST': <Astakos host>,
        <...snip..>
    }
}

DATABASE_ROUTERS = ['snf_django.utils.routers.SynnefoRouter']

You may notice that there are three databases instead of two. That’s because Django requires that every DATABASES setting has a default database. In our case, we suggest using the Cyclades database as the default.

You should also make sure not to enable database (psycopg) connection pooling

(as described in the installation guide)

by omitting (deleting or commenting out) all the relevant pooling options from

the DB configuration (i.e. the synnefo_poolsize option).

Finally, do not forget to add the DATABASE_ROUTERS setting shown in the above example; it must always be present in multi-db setups.
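For readers unfamiliar with Django database routers, the sketch below shows the general shape of one: Django calls `db_for_read` and `db_for_write` with a model class and uses the returned alias, falling back to the default database when a router returns None. This is a generic illustration and not the implementation of SynnefoRouter; the class name and the app labels in `ROUTES` are hypothetical:

```python
class AppLabelRouter(object):
    """Route models to a database alias chosen by their Django app label."""

    # Hypothetical mapping: app label -> alias defined in DATABASES.
    ROUTES = {"cyclades_app": "cyclades", "astakos_app": "astakos"}

    def _alias(self, model):
        # None means "no opinion"; Django then tries the next router
        # and finally falls back to the 'default' database.
        return self.ROUTES.get(model._meta.app_label)

    def db_for_read(self, model, **hints):
        return self._alias(model)

    def db_for_write(self, model, **hints):
        return self._alias(model)
```

Without a router, every query would go to the default database, which is why the setting is mandatory as soon as Astakos and Cyclades live in separate databases.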

Disabling Admin¶

The easiest way to disable the Admin Dashboard is to set the ADMIN_ENABLED

setting to False.

Synnefo management commands (“snf-manage”)¶

Each Synnefo service (Astakos, Pithos and Cyclades) is controlled by the administrator using the “snf-manage” admin tool. This tool is an extension of the Django command-line management utility. It is run on the host that runs each service and provides different types of commands depending on the services running on the host. If you are running more than one service on the same host, “snf-manage” adds all the corresponding commands for each service dynamically, providing a unified admin environment.

To run “snf-manage” you just type:

# snf-manage <command> [arguments]

on the corresponding host that runs the service. For example, if you have all services running on different physical hosts you would do:

root@astakos-host # snf-manage <astakos-command> [argument]

root@pithos-host # snf-manage <pithos-command> [argument]